Research Highlight

Artificial Intelligence: Can It Improve VA Healthcare?

Key Points

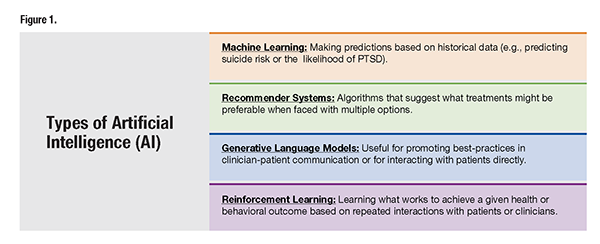

It seems that every day the media comments on a new wave of artificial intelligence (AI) technology and its influence on fields as varied as basic science, education, entertainment, and business. New courses are popping up across university campuses to help researchers and students understand what is happening in this fast-moving field. Some of the buzz around AI is focused on fears about its potential risks. Will AI replace human workers? Will AI weaken social networks? Will AI increase economic disparities? Will AI give a few technologically savvy moguls disproportionate economic power?

Excitement about AI’s potential is well justified – as are many of the concerns surrounding its impact on our lives. But given the hype and the pace of progress, conversations are often hampered by an inexact sense of what we’re actually talking about. For those of us dedicated to improving Veterans’ health, it’s important to have a concrete understanding of what we mean by “AI” and how it might improve the quality, efficiency, and accessibility of VA care. Here, we briefly present four examples of AI that hold promise for VA research and clinical practice (see Figure 1).

Machine Learning

Machine learning (ML) is often used as a synonym for “AI.” In other contexts, experts use the term ML to mean the use of data to make better predictions, such as whether the stock market will go up or whether an incoming email should be sent to spam. In healthcare, ML methods have attracted interest due to their potential for using clinical information to make more accurate diagnoses (e.g., identifying malignancies) and predictions (e.g., whether an elderly person will have an injurious fall). Importantly for VA, researchers are learning how to use ML and health record information to identify patients at risk for suicide who are missed by standard VA prediction models and therefore do not receive enhanced suicide prevention care.
ML could identify the patterns in these patients’ data that cause them to fly under the radar when in fact they need urgent help. A recent VA study highlights the potential for ML to improve these predictions, ensuring that Veterans who need preventive care receive potentially life-saving treatment.¹

Recommender Systems

Most of us interact with recommender systems, such as those used by Netflix or Amazon, in our daily lives. Often, we find that recommendations are spot-on, even though the platforms we’re interacting with don’t ask us whether (for example) we like romantic comedies, horror flicks, or documentaries – and never ask for information such as our age or gender. While research on recommender systems in healthcare is still in its early stages, these systems could improve the patient-centeredness of care in situations where Veterans and clinicians are faced with numerous options. Imagine a patient with diabetes and multiple comorbidities – should they prioritize weight management? Smoking cessation? Depression? Home glucose monitoring? Although clinicians try to balance these priorities while respecting patients’ preferences, they typically receive little guidance as to the optimal approach for enhancing patients’ health outcomes. Recommender systems could help clinicians and Veterans set priorities for treatment using some of the same algorithmic approaches that Netflix uses to suggest the perfect movie.

Generative Language Models (GLMs)

GLMs burst onto the scene in recent years with ChatGPT and other tools, making online searching and text generation possible without the need to communicate via programming languages. But can GLMs be used to improve Veterans’ healthcare? The answer is almost certainly yes. The following paragraph describes just one example.

Solid evidence has shown that specific communication strategies, such as motivational interviewing and cognitive behavioral approaches, improve patient engagement, behavior change, and health outcomes.
Unfortunately, the quality of patient interactions is uneven, and many clinicians lack feedback that could help them learn how to have more productive discussions. GLM-based training could be a scalable way to enhance clinician abilities, with studies suggesting that such training improves clinicians’ use of evidence-based communication skills.² For some patients, automated GLM chatbot messages constructed to reflect communication best practices may increase access to information and support even when clinicians are unavailable.

Reinforcement Learning (RL)

RL is a field of AI in which an AI system “learns” to make decisions over successive interactions with an “environment” (in this case a Veteran patient or clinician) that provides repeated feedback, allowing the system to improve its decisions based on experience. As one example, our research team tested whether an RL system could improve the efficiency of VA telephone cognitive behavioral therapy (CBT) for Veterans with chronic pain. Rather than delivering a standard course of CBT consisting of 10 weekly 45-minute sessions, the system chose each week between a standard session, a brief 15-minute booster session, or digital support only. We found that compared to the standard course of treatment, the RL-supported treatment achieved similar outcomes while requiring only half the therapist time.³ Moreover, patients who received the RL-supported care were less likely to drop out of treatment or miss sessions, suggesting that RL programs could increase treatment acceptability. This trial is just one example of how RL-supported treatments could increase the effective use of VA services, increasing VA’s ability to treat Veterans with the highest need.

The Vital Role of Research

While excitement about AI is at an all-time high, some clinicians, policymakers, and patient advocates are worried. Will AI systems increase disparities in patient access or outcomes?
If algorithms are poorly specified, developed using non-representative datasets, or implemented without ongoing oversight, they certainly could. To address these and other concerns, VA research that rigorously evaluates AI’s impact on treatment and outcomes will be essential.

In June 2024, VHA announced the creation of the Digital Health Office (DHO) to be the central coordinator of technology-focused components, including AI. DHO’s goal is to enhance partnerships across VA to foster innovation and quicken the translation of effective programs into practice. VA health systems researchers will be key partners with DHO in this process. Through these efforts, VA can embrace the great potential of AI for improving Veteran health, while addressing potential risks.
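
For readers who want a more concrete picture of the reinforcement learning idea described earlier, the following is a minimal sketch of a system that picks one of three session types each week and updates its estimates from feedback. It is a generic epsilon-greedy bandit, not the algorithm used in the VA trial, which this article does not specify. The action names, the `EpsilonGreedyAgent` class, the `simulate` function, and the reward probabilities are all hypothetical and chosen purely for illustration; they are not study data.

```python
import random

# Hypothetical action names, loosely mirroring the three weekly options in the text.
ACTIONS = ["standard_session", "booster_session", "digital_support"]


class EpsilonGreedyAgent:
    """Generic epsilon-greedy bandit (an illustration, not the trial's method)."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.actions}    # times each action was tried
        self.values = {a: 0.0 for a in self.actions}  # running mean reward per action

    def choose(self):
        # Explore a random action with probability epsilon;
        # otherwise exploit the action with the best current estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[a])

    def update(self, action, reward):
        # Incremental mean: new = old + (reward - old) / n
        self.counts[action] += 1
        n = self.counts[action]
        self.values[action] += (reward - self.values[action]) / n


def simulate(weeks=500, seed=0):
    # Made-up probabilities of a "good week" per action (assumption, not data).
    true_success = {
        "standard_session": 0.5,
        "booster_session": 0.7,
        "digital_support": 0.3,
    }
    env_rng = random.Random(seed + 1)
    agent = EpsilonGreedyAgent(ACTIONS, epsilon=0.1, seed=seed)
    for _ in range(weeks):
        action = agent.choose()
        # The "environment" returns feedback: 1.0 for a good week, else 0.0.
        reward = 1.0 if env_rng.random() < true_success[action] else 0.0
        agent.update(action, reward)
    return agent


if __name__ == "__main__":
    trained = simulate()
    for name in ACTIONS:
        print(name, trained.counts[name], round(trained.values[name], 2))
```

This sketch ignores everything about the individual patient. A system intended for clinical use would presumably condition its choices on patient-reported progress and would need the kind of rigorous evaluation and oversight discussed above.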

References
